The buzz over the last year and a half around AI has raised awareness of Generative AI systems (Gen AI) and large language models (LLMs) and shone a spotlight on the issues and gaps in these systems, specifically regarding bias and discriminatory practices.

Here are some definitions (courtesy of Google’s Gemini) so we’re all on the same page.

Gen AI: A system that uses machine learning, algorithms, and training data to generate content based on user inputs or prompts. Content can include text, images, music, video, code, and more. Gen AI models are bidirectional, taking cues from human inputs and prompts to shape their content production.

LLMs: Programs that use deep learning to analyze large amounts of data to understand human language and perform natural language processing (NLP) tasks. LLMs can recognize, generate, summarize, translate, and predict text and other content. They can also understand how words, sentences, and characters work together and recognize distinctions between different pieces of content.

By now, we’ve all seen the headlines and examples of clear biases in the output from prominent Gen AI solutions and LLMs. Earlier this year, UNESCO shared a study highlighting sex and gender biases in prominent LLMs.

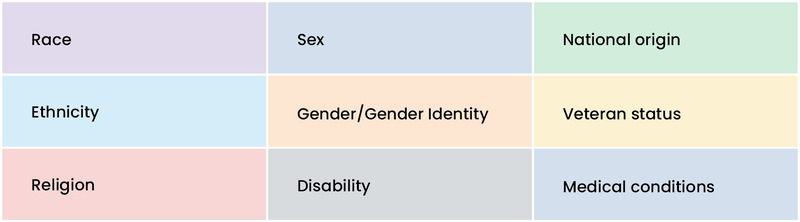

The list of potential biases is long, to say the least. They can include:

According to the CompTIA 2024 AI Outlook, 22% of companies are pursuing the integration of AI across a wide variety of products and workflows, and another 33% are engaging in limited implementation of AI.

With so many companies and government entities considering adoption or having already implemented AI and LLMs into their products, processes, and workflows, concerns about potential bias and discriminatory practices are growing.

The table above clearly illustrates that biases and discrimination have a direct impact on all people, whether they are part of a historically marginalized community or not.

Where do biases in our AI systems come from? It’d be easy to declare this a technology issue, but it stems from human, systemic, and institutionalized biases resident in our digitized world. When systems use web data and information for training, they reflect the biases present in that training data, and those biases become part of any output from that system.

As for systemic or institutional bias, it can come directly from an institution’s own data and inadvertently exclude people. One example is a hiring tool that learned the features of a company’s employees (predominantly men) and then rejected women applicants for spurious and discriminatory reasons; resumes containing the word “women’s,” as in “women’s chess club captain,” were penalized in the candidate ranking.1

Real-world examples show that these ingrained biases can affect a person’s financial well-being, ability to get hired, medical treatment, and mental health, and can lead to an overall loss of opportunity.

It’s widely understood that fairer AI systems require data privacy, transparency, and accountability to be at the forefront of all internal use policies.

These policies must be coupled with the ability to detect existing biases in those systems and accompanied by mitigation plans.

So what does implementation look like?

Eliminating bias in AI begins with data anonymization. Data anonymization is nothing new; industries like Healthcare, Financial Services, and Telecommunications have been doing it effectively for years. Government organizations also use it to protect Personally Identifiable Information (PII).

One data anonymization technique that can protect privacy while preserving data utility for AI applications is pseudonymization. It replaces any identifying information with a pseudonymous identifier. Across industries, pseudonymization protects personal information like first and last names, addresses, and Social Security numbers.
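As a rough illustration of the idea, here is a minimal Python sketch of pseudonymization using a keyed hash (HMAC-SHA256) from the standard library. The field names, the `SECRET_KEY` value, and the helper functions are all hypothetical and chosen only for this example; a real deployment would keep the key in a secrets manager and follow its own data governance rules.

```python
import hashlib
import hmac

# Hypothetical secret key for illustration only; store real keys securely.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical set of fields treated as direct identifiers.
IDENTIFYING_FIELDS = {"first_name", "last_name", "address", "ssn"}


def pseudonymize_value(value: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifying value to a stable pseudonymous identifier."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated to 16 hex characters for readability in this sketch.
    return digest[:16]


def pseudonymize_record(record: dict, key: bytes = SECRET_KEY) -> dict:
    """Replace identifying fields and leave all other fields untouched."""
    return {
        field: pseudonymize_value(str(value), key)
        if field in IDENTIFYING_FIELDS
        else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    applicant = {
        "first_name": "Ada",
        "last_name": "Lovelace",
        "ssn": "123-45-6789",
        "years_experience": 7,
    }
    print(pseudonymize_record(applicant))
```

Because the same input and key always yield the same identifier, records about one person can still be linked for analysis without exposing who that person is. The non-identifying fields, which carry the utility for AI applications, pass through unchanged.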

Additional techniques that can help preserve privacy are federated learning and differential privacy, which enable data analysis without compromising privacy.
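To make differential privacy a little more concrete, the sketch below applies the Laplace mechanism to a simple counting query using only the Python standard library. The function names, the sample ages, and the choice of `epsilon` are assumptions made for illustration; production systems would typically rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    # expovariate takes a rate, so rate = 1 / scale gives a mean of `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the values that satisfy the predicate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    # A counting query has sensitivity 1: adding or removing one person
    # changes the result by at most 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [25, 31, 47, 52, 38, 61]  # hypothetical sample data
    print(private_count(ages, lambda age: age >= 40, epsilon=1.0))
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy, which is one instance of the trade-off teams have to weigh.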

Data anonymization has pros and cons. Teams must decide how they will use PII to ensure they pursue the right approach.

Transparency is key to reducing bias and discrimination in AI systems. It’s a broad term; for AI systems, it means openness and clarity about their algorithms, decision-making processes, and data sources. Areas to focus on for data transparency include:

Transparency is a critical component of responsible AI, as it helps ensure that decisions are made fairly, ethically, and without bias and, most importantly, that these efforts are shared with system users.

Accountability involves establishing processes and frameworks to create an oversight and monitoring function over these systems to ensure that they operate as intended and do not cause unintended consequences.

What transparency and accountability have in common is that both require active monitoring and reporting on adherence to stated policies and guidance, and on compliance with all applicable regulations. As with most technology solutions, these controls need to be audited and tested to ensure that they work as intended, do not cause new issues, and surface any subsequent bias.
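One way an audit might test for bias is to compare how often a system selects people from different groups. The Python sketch below computes per-group selection rates and the ratio of the lowest rate to the highest, sometimes called a disparate impact ratio. The function names, group labels, and sample decisions are hypothetical, and the 0.8 threshold reflects the commonly cited "four-fifths" rule of thumb rather than a definitive test of fairness.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of positive outcomes for each group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected in any group, so the rates are equal.
        return 1.0
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical audit sample of (group, was_selected) outcomes.
    sample = (
        [("men", True)] * 6 + [("men", False)] * 4
        + [("women", True)] * 3 + [("women", False)] * 7
    )
    rates = selection_rates(sample)
    ratio = disparate_impact_ratio(rates)
    print(rates, ratio)
    if ratio < 0.8:  # assumed review threshold
        print("Selection rates differ enough to warrant a closer review.")
```

A low ratio does not prove discrimination on its own, but it gives the monitoring and reporting function a concrete number to track over time.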

Bias and discrimination exist in these systems, and the solutions that can help reduce them in AI systems are nothing ‘new.’ They are tried-and-true approaches that multiple industries have used for years to protect sensitive data and create and maintain the confidence of consumers, regulators, and investors. As these systems are being developed, companies need to consider whether speed to market is more important than developing systems that have enshrined fairness and are not actively reinforcing societal bias.

1 – Jeffrey Dastin. Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. Oct. 10, 2018. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G